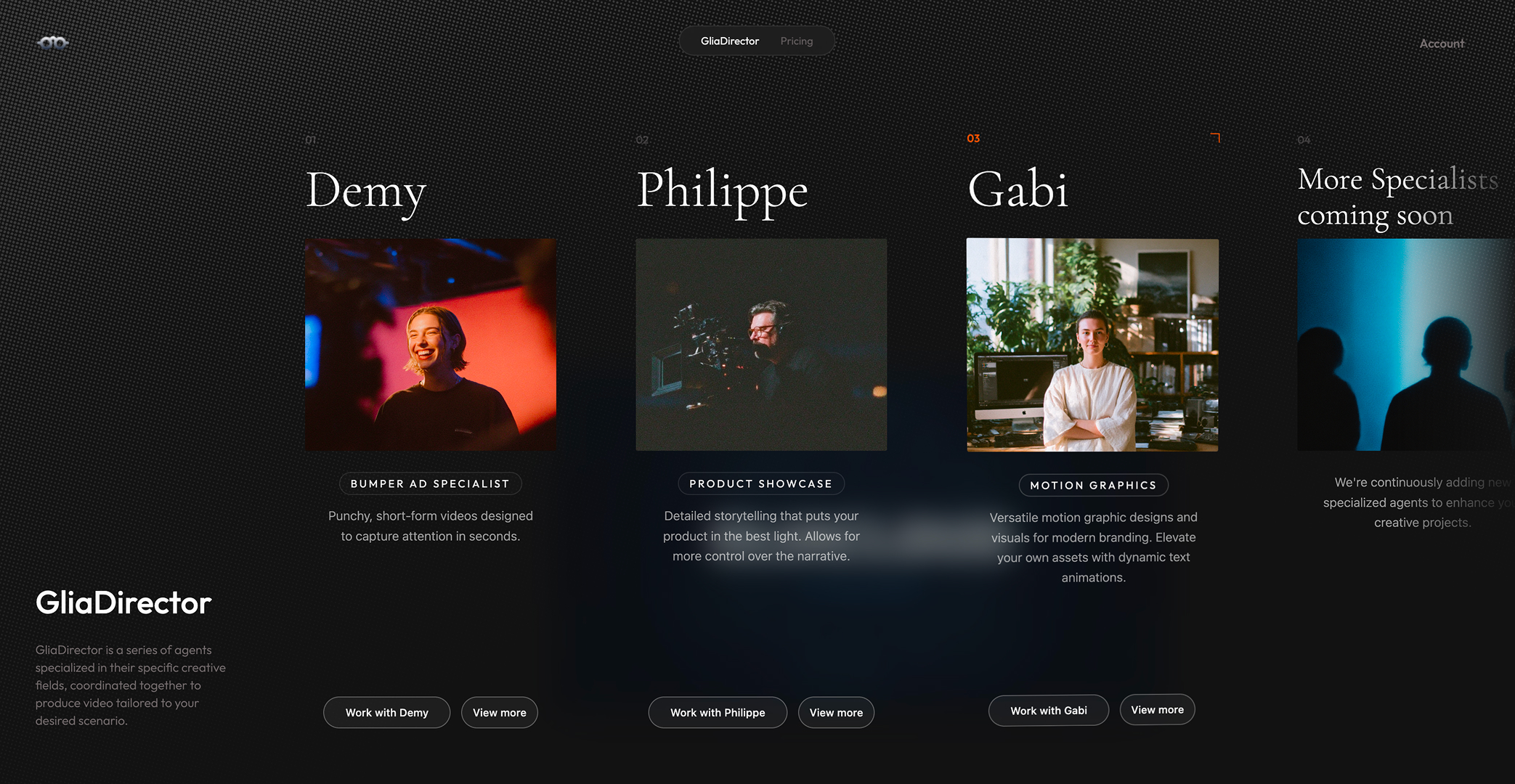

The Chat-Based AI Director represents a shift from prompt-and-pray video generation to a directed, conversational workflow. It addresses the gap between vague creative intent and specific output by enabling a 'Diverge & Converge' interaction model.

This is an ongoing prototype that experiments with generative AI workflows, aimed specifically at SMB marketers who lack access to professional creative tools and the know-how to use them.

GliaCloud specializes in empowering the SMB and E-Commerce markets with content automation. This project represents the Lab Team's mission to explore future-facing workflows that address the specific constraints and needs of this user segment.

SMB Marketers & E-commerce Owners

The primary user is an SMB marketer or e-commerce owner. They are time-poor, lack formal design training, and find professional video tools intimidating. Their core pain points are:

1. The Blank Canvas: Not knowing where to start.
2. Vocabulary Gap: Inability to describe visual styles in 'prompt-speak.'
3. Consistency: Frustration when AI changes the wrong details during iteration.

The Blank Canvas Problem

SMB marketers with e-commerce needs often face a 'Blank Canvas Problem.' They have a goal (e.g., 'sell this product') but lack the creative direction to write effective, detailed prompts. Their input is often too minimal, such as 'create a 15-second ad for me', which results in generic AI output. Conversely, when they do have feedback, they lack the vocabulary to guide the AI effectively, which leads to frustration when a regeneration changes the entire scene rather than just the desired element.

Diverge & Converge

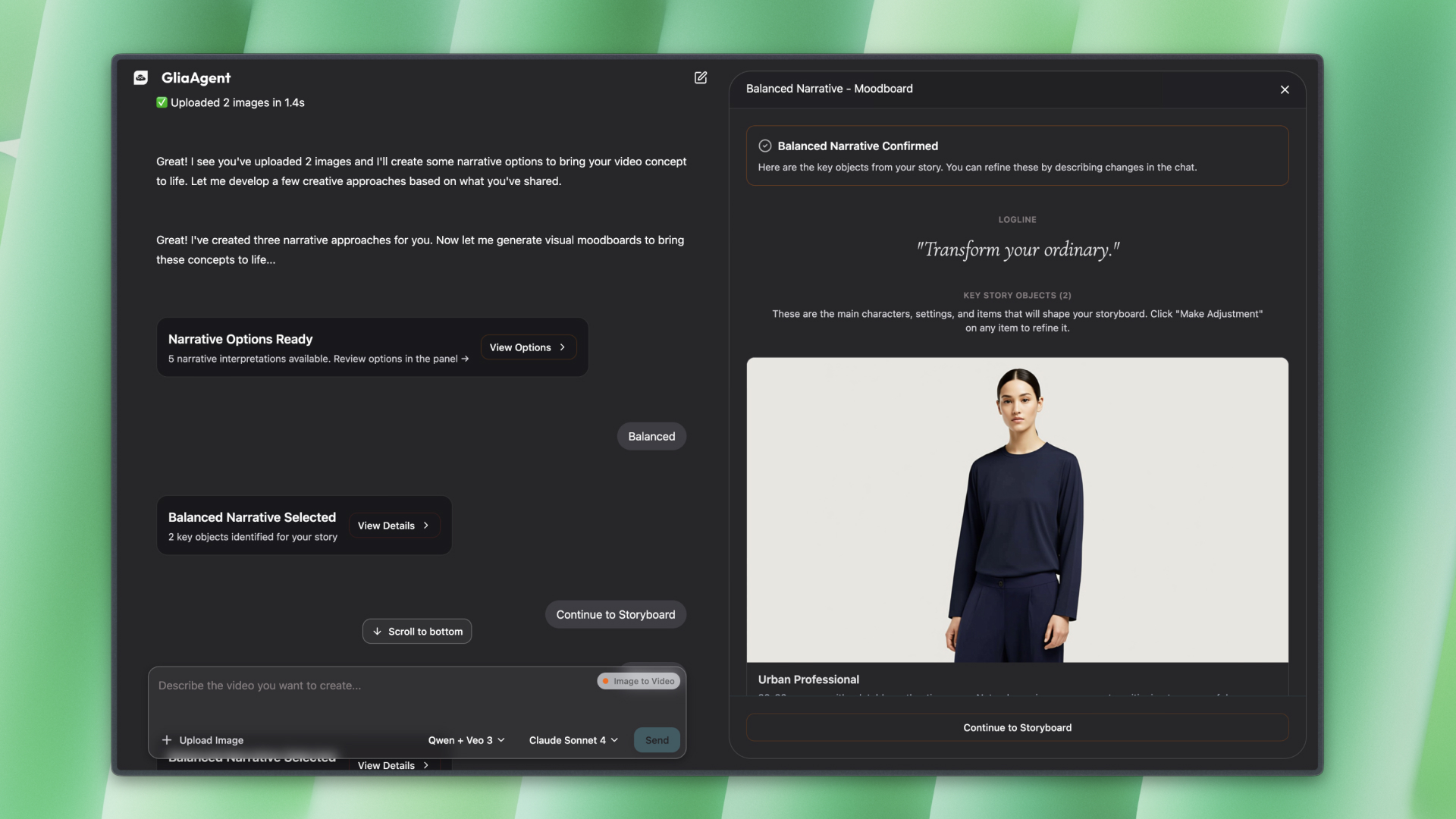

To address this, we deconstructed the video creation process into discrete, manageable milestones aligned with current GenAI capabilities. Leveraging the generative model's capacity for exploration, we present users with high-level 'checkpoints'—narrative beats packaged with visual references. This structure transforms the overwhelming blank canvas into a series of curated choices, allowing users to steer the creative direction confidently without getting lost in the noise of infinite possibilities.

Narrative checkpoint presented as options for user selection.
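
The checkpoint structure described above can be sketched as a small data model. This is a minimal illustration, not the prototype's actual code: the names `Option`, `Checkpoint`, and `locked_direction`, along with the sample beats and file names, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Option:
    """One candidate direction: a narrative beat plus its visual references."""
    beat: str
    reference_images: list[str]


@dataclass
class Checkpoint:
    """A milestone where the system diverges into options and the user converges on one."""
    name: str
    options: list[Option]
    chosen: Optional[int] = None  # index of the selected option, None while still open

    def choose(self, index: int) -> Option:
        if not 0 <= index < len(self.options):
            raise IndexError(f"no option {index} at checkpoint {self.name!r}")
        self.chosen = index
        return self.options[index]


def locked_direction(checkpoints: list[Checkpoint]) -> list[str]:
    """Return the beats the user has committed to, in order, skipping open checkpoints."""
    return [cp.options[cp.chosen].beat for cp in checkpoints if cp.chosen is not None]


# Hypothetical example: two checkpoints, the user has decided only the first.
checkpoints = [
    Checkpoint("opening", [
        Option("Product on a sunny kitchen counter", ["kitchen.png"]),
        Option("Close-up unboxing", ["unboxing.png"]),
    ]),
    Checkpoint("closing", [Option("Logo with call to action", ["logo.png"])]),
]
checkpoints[0].choose(1)
direction = locked_direction(checkpoints)
```

Modeling each milestone as a fixed set of options keeps the user in the role of choosing rather than writing: the only input required at a checkpoint is an index.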

Context Engineering

We developed a context management pipeline. It extracts 'Main Narrative Items' (such as a specific product) and generates a 'Set Image' reference for each one. These references then condition all subsequent video clip generation. This allows for granular control: if a user dislikes the look of a prop, they can change just that reference image, and the entire video sequence can be regenerated with the new look while maintaining the narrative flow.

Key objects like protagonists and products are extracted first as global style references. Once users change these key objects, the rest of the scene updates accordingly to maintain consistency.
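
The separation between the reference set and the narrative flow can be sketched as follows. This is a simplified illustration under stated assumptions, not the prototype's implementation: `Clip`, `ReferenceSet`, `generate_clip`, and `replace_reference` are hypothetical names, and `generate_clip` is a stand-in that returns a text description where a real pipeline would call a video model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Clip:
    beat: str               # the narrative beat; never modified by a reference swap
    item_names: list[str]   # main narrative items that appear in this clip
    version: int = 0        # incremented each time the clip is (re)generated


@dataclass
class ReferenceSet:
    """Maps each main narrative item to its current 'Set Image' reference."""
    images: dict[str, str] = field(default_factory=dict)

    def conditioning_for(self, clip: Clip) -> dict[str, str]:
        """Select only the references for items that appear in this clip."""
        return {n: self.images[n] for n in clip.item_names if n in self.images}


def generate_clip(clip: Clip, refs: ReferenceSet) -> str:
    """Stand-in for a video model call (assumption): returns a description string."""
    conditioning = refs.conditioning_for(clip)
    clip.version += 1
    used = ", ".join(f"{k}={v}" for k, v in sorted(conditioning.items()))
    return f"{clip.beat} [v{clip.version}; refs: {used}]"


def replace_reference(refs: ReferenceSet, clips: list[Clip], item: str, new_image: str) -> list[str]:
    """Swap one reference image, then regenerate the whole sequence with beats unchanged."""
    refs.images[item] = new_image
    return [generate_clip(clip, refs) for clip in clips]


# Hypothetical example: the user dislikes the mug and swaps its reference image.
refs = ReferenceSet({"mug": "mug_v1.png", "barista": "barista_v1.png"})
clips = [Clip("Barista pours coffee", ["barista", "mug"]), Clip("Mug on a balcony table", ["mug"])]
first_pass = [generate_clip(clip, refs) for clip in clips]
second_pass = replace_reference(refs, clips, "mug", "mug_v2.png")
```

Because the beats live on the clips and the look lives in the reference set, swapping one image changes what the clips are conditioned on without touching the story order.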

Insight

"Users are more comfortable acting as 'Directors' who choose from options than 'Writers' who must create from scratch. By effectively managing the 'reference set' separately from the 'narrative flow,' we can give non-creative users the feeling of granular control without requiring them to be prompt engineers."

Next Steps

MVP coming soon